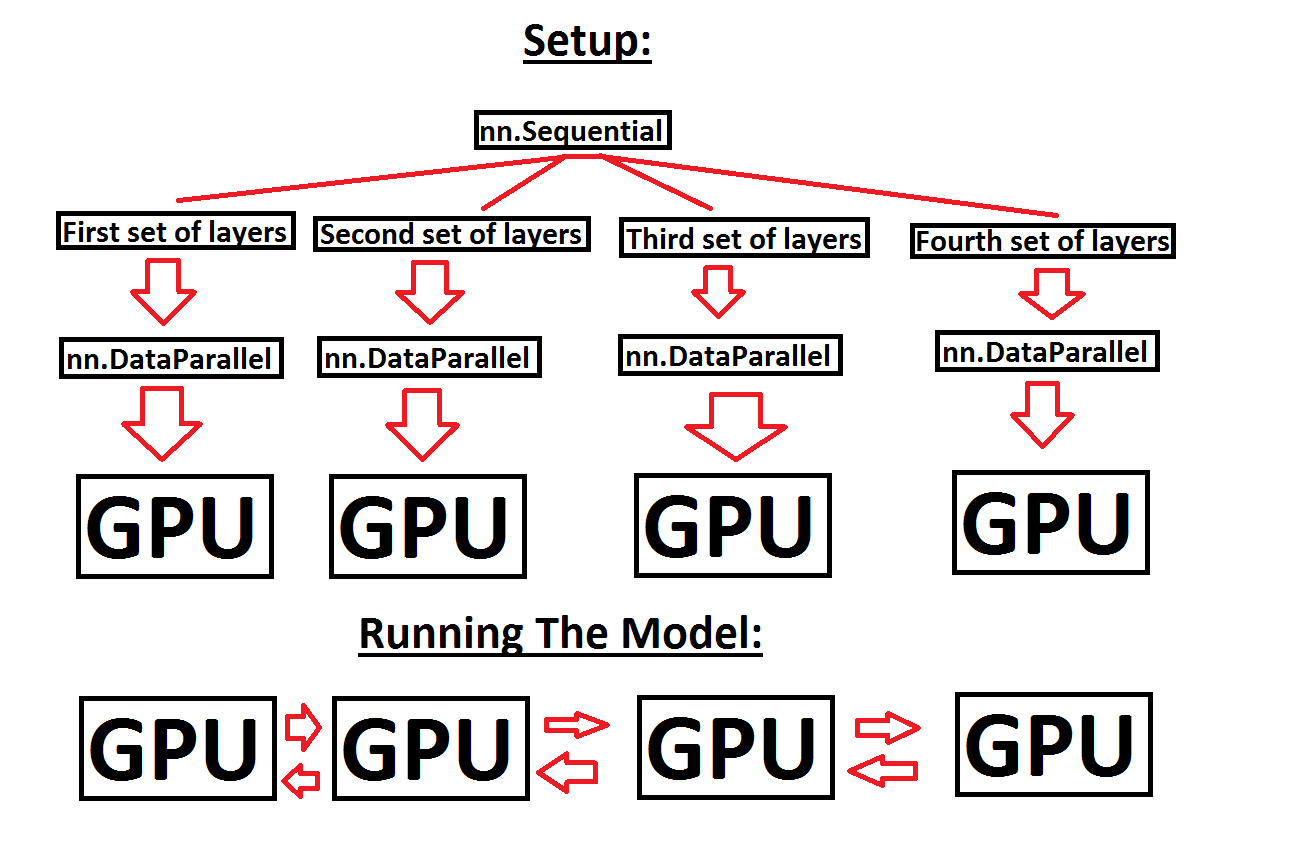

Help with running a sequential model across multiple GPUs, in order to make use of more GPU memory - PyTorch Forums

MONAI v0.3 brings GPU acceleration through Auto Mixed Precision (AMP), Distributed Data Parallelism (DDP), and new network architectures | by MONAI Medical Open Network for AI | PyTorch | Medium

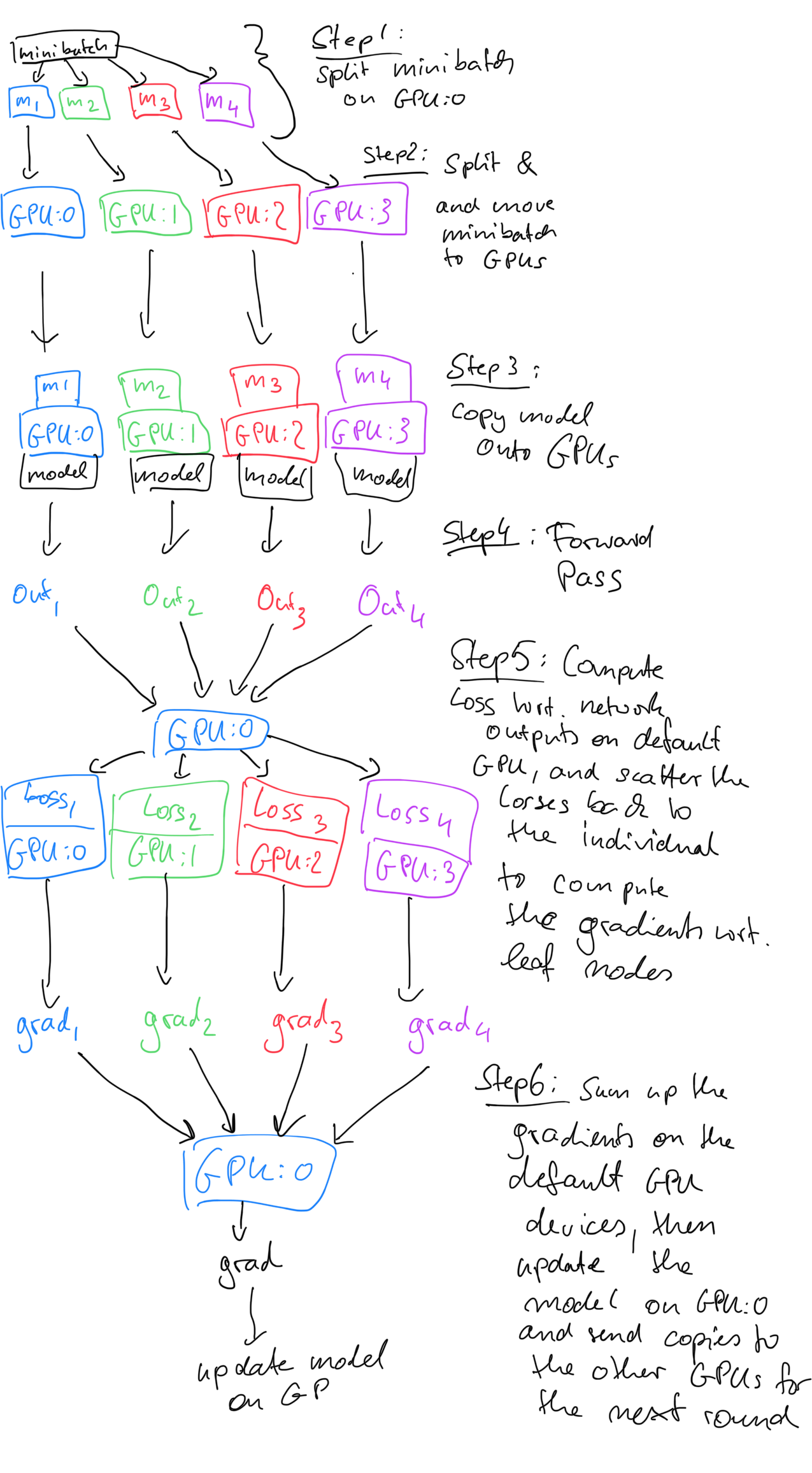

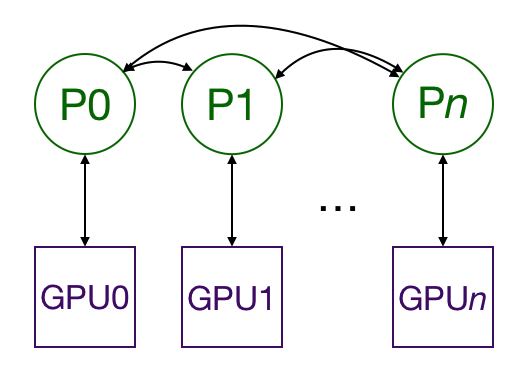

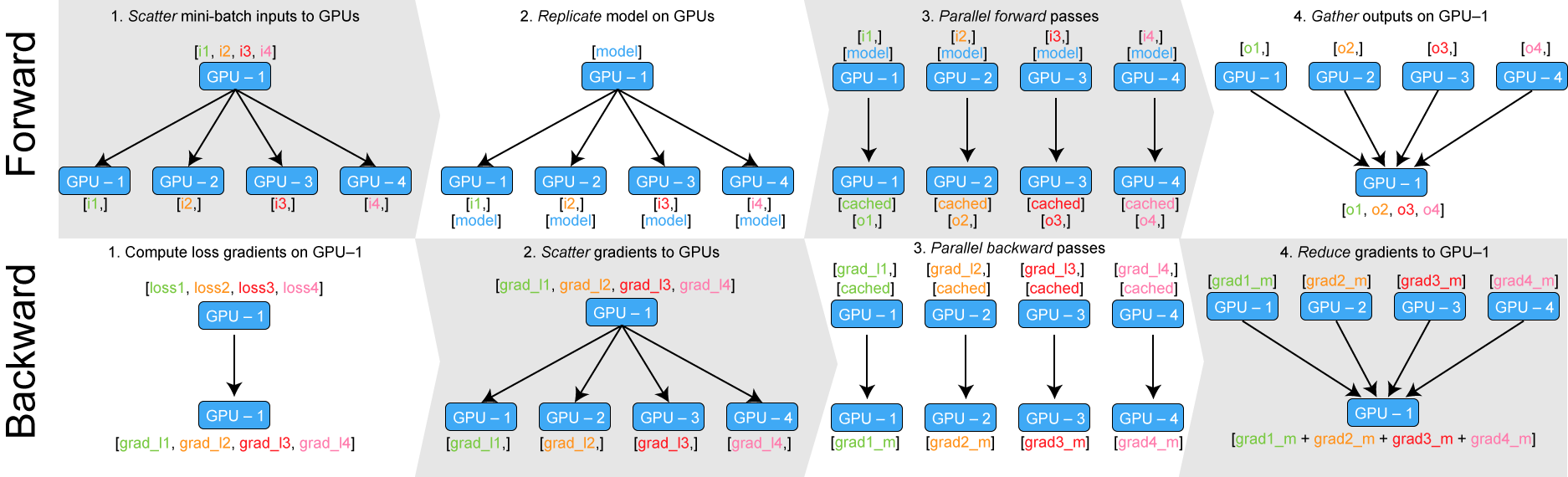

How distributed training works in Pytorch: distributed data-parallel and mixed-precision training | AI Summer

PyTorch-Direct: Introducing Deep Learning Framework with GPU-Centric Data Access for Faster Large GNN Training | NVIDIA On-Demand

Imbalanced GPU memory with DDP, single machine multiple GPUs · Discussion #6568 · PyTorchLightning/pytorch-lightning · GitHub